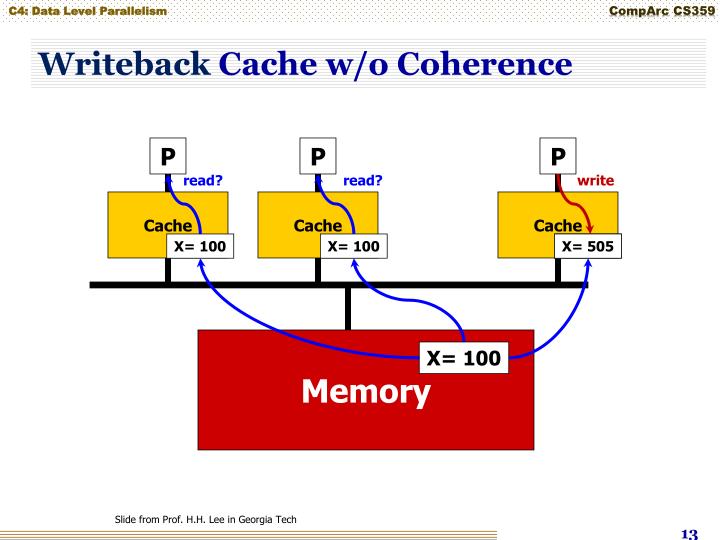

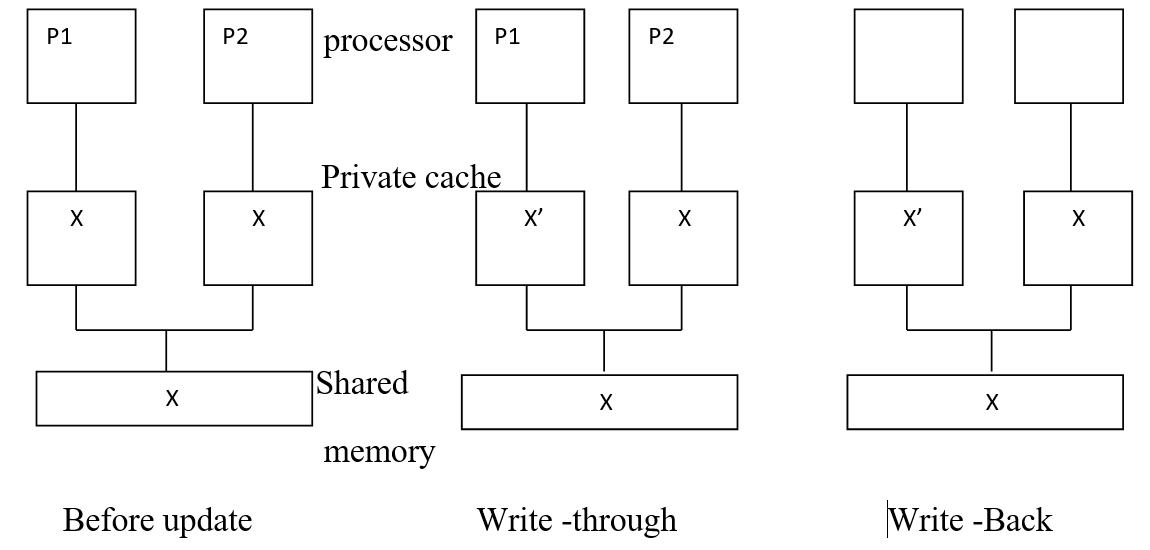

There are 3 types of caches: In-process Cache, Persistent in-process Cache, and Distributed Cache. An in-process (in-memory) cache is used when you want to implement a cache in a single process. When the process dies, the cache dies with it. If you’re running the same process on several servers, you will have a separate cache for each server. A local cache is private, however, so its contents can differ from one server to the next. Cache-aside can be useful in this environment if an application repeatedly accesses the same data.

When data comes in to be written, Write-Back will put the data into the cache, send an “all done” message, and keep the data to write to the storage disk later. This solves a lot of the latency problems, because the system doesn’t have to wait for those deep writes.

With the right support, Write-Back can be the best method for multi-stage caching. It helps when the cache has large amounts of memory (i.e. memory measured in terabytes, not gigabytes) in order to handle large volumes of activity. Sophisticated systems will also need more than one solid state drive, which can add cost.

It’s critically important to consider scenarios like power failure or other situations where critical data can be lost. But with the right “cache protection,” Write-Back can really speed up an architecture with few downsides. For example, Write-Back systems can make use of RAID or redundant designs to keep data safe. Even more elaborate systems will help the cache and the SAN or underlying storage disk to work with each other on an “as-needed basis,” delegating writes to either the deep storage or the cache depending on the disk’s workload.

The design philosophy of Write-Back is one that reflects the problem-solving that today’s advanced data handling systems bring to big tasks. By creating a more complex architecture, and using a cache in a more sophisticated way, Write-Back removes much of the write latency, and although it may require more overhead, it allows for better system growth with fewer growing pains.
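The Write-Back write path (store in the cache, acknowledge, write to storage later) can be sketched in C# roughly as follows. This is a minimal, single-threaded illustration under stated assumptions, not a production design: `BackingStore`, `WriteBackCache`, `Flush`, and `PendingWrites` are hypothetical names, and the "storage" is only an in-memory dictionary standing in for a slow disk.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for slow permanent storage (disk, SAN, database).
public class BackingStore
{
    private readonly Dictionary<string, string> _data = new Dictionary<string, string>();

    // Counts how many writes actually reached "permanent" storage.
    public int WriteCount { get; private set; }

    public void Write(string key, string value)
    {
        _data[key] = value;
        WriteCount++;
    }

    public bool TryRead(string key, out string value)
    {
        return _data.TryGetValue(key, out value);
    }
}

// Hypothetical write-back cache: writes are acknowledged as soon as they
// are in the cache and are pushed to the backing store later by Flush().
public class WriteBackCache
{
    private readonly BackingStore _store;
    private readonly Dictionary<string, string> _cache = new Dictionary<string, string>();
    private readonly HashSet<string> _dirty = new HashSet<string>();

    public WriteBackCache(BackingStore store)
    {
        _store = store;
    }

    // Number of keys written to the cache but not yet to the backing store.
    public int PendingWrites
    {
        get { return _dirty.Count; }
    }

    // Store the value in the cache, mark it dirty, and return immediately.
    public void Write(string key, string value)
    {
        _cache[key] = value;
        _dirty.Add(key);
    }

    // Read from the cache first; fall back to the backing store on a miss.
    public bool TryRead(string key, out string value)
    {
        if (_cache.TryGetValue(key, out value))
        {
            return true;
        }
        if (_store.TryRead(key, out value))
        {
            _cache[key] = value;
            return true;
        }
        return false;
    }

    // Deferred write: push every dirty entry to the backing store.
    // Returns the number of entries written.
    public int Flush()
    {
        int flushed = 0;
        foreach (string key in _dirty)
        {
            _store.Write(key, _cache[key]);
            flushed++;
        }
        _dirty.Clear();
        return flushed;
    }
}
```

In this sketch, two writes to the same key before a flush reach the store only once, which is part of where the speed-up comes from. Anything still pending when the process stops is lost, which is the power-failure risk that cache protection has to cover.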
Write-Back improves system outcomes in terms of speed, because the system doesn’t have to wait for writes to go to underlying storage. Write-Through, by contrast, is commonly implemented for failure resiliency and to avoid implementing a failover or HA strategy for the cache, because data lives in both locations. However, Write-Through incurs latency, as the I/O commit is determined by the speed of the permanent storage, which is no match for the speeds of CPU and networking. You’re only as fast as your slowest component, and Write-Through can critically hamstring application speed.
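For contrast, a Write-Through write path might look like the sketch below. It is only an illustration under stated assumptions: `PermanentStore`, `WriteThroughCache`, and the `commitDelay` parameter are hypothetical names, and the storage latency is simulated with `Thread.Sleep`.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;

// Hypothetical stand-in for permanent storage with a simulated commit delay.
public class PermanentStore
{
    private readonly Dictionary<string, string> _data = new Dictionary<string, string>();
    private readonly TimeSpan _commitDelay;

    public PermanentStore(TimeSpan commitDelay)
    {
        _commitDelay = commitDelay;
    }

    // Blocks for the simulated I/O commit time before the write is durable.
    public void Write(string key, string value)
    {
        Thread.Sleep(_commitDelay);
        _data[key] = value;
    }

    public bool Contains(string key)
    {
        return _data.ContainsKey(key);
    }
}

// Hypothetical write-through cache: every write goes to the cache and then
// to permanent storage before control returns to the caller.
public class WriteThroughCache
{
    private readonly PermanentStore _store;
    private readonly Dictionary<string, string> _cache = new Dictionary<string, string>();

    public WriteThroughCache(PermanentStore store)
    {
        _store = store;
    }

    public void Write(string key, string value)
    {
        _cache[key] = value;       // first write: the cache
        _store.Write(key, value);  // second write: waits for the storage commit
    }

    public bool TryRead(string key, out string value)
    {
        return _cache.TryGetValue(key, out value);
    }
}
```

Because `Write` does not return until the store has committed, the data is in both places as soon as the call completes, and the caller pays the storage latency on every write.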

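Here’s the issue with Write-Through: every write operation is done twice, in the cache and then in permanent storage. Before applications can proceed, the permanent storage must return the I/O commit back to the cache, then back to the applications.

In the preceding discussion we came to understand how information can be stored in system memory using caching.

    using System;
    using System.Collections;
    using System.Runtime.Caching;

    private const string CacheKey = "availableStocks";

    public IEnumerable GetAvailableStocks()
    {
        ObjectCache cache = MemoryCache.Default;

        // Serve the cached copy when one exists.
        if (cache.Contains(CacheKey))
            return (IEnumerable)cache.Get(CacheKey);

        // Cache miss: fall back to the default stock function.
        // GetDefaultStocks() is an assumed name; its body is not shown in the source.
        IEnumerable availableStocks = GetDefaultStocks();

        CacheItemPolicy cacheItemPolicy = new CacheItemPolicy();
        // The source shows only "(1.0)" here; a one-hour absolute expiration is assumed.
        cacheItemPolicy.AbsoluteExpiration = DateTimeOffset.Now.AddHours(1.0);
        cache.Add(CacheKey, availableStocks, cacheItemPolicy);
        return availableStocks;
    }

A good example is the use case that drove me to write CacheControl in the first place. I currently have it implemented locally for the redis backend.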

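    namespace CachingWithConsoleApplication

    // PS is the object that exposes GetAvailableStocks(); its declaration is not shown in the source.
    // The element type <string> is an assumption: the type argument was lost in the source.
    List<string> Pizzas = (List<string>)PS.GetAvailableStocks();
    Pizzas = (List<string>)PS.GetAvailableStocks();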

#Local private cache write through code#

This article introduces the implementation of caching with the C# language. Before starting the discussion about code and the implementation of caching, I would like to explain caches and caching.

A cache is one of the most valuable features that Microsoft provides. It is a type of memory that is relatively small but can be accessed very quickly. It essentially stores information that is likely to be used again. For example, web browsers typically use a cache to make web pages load faster by storing a copy of the webpage files locally, such as on your local computer.

Caching is the process of storing data in a cache. Caching with the C# language is very easy. .NET provides classes for working with caching in C#. In this illustration I am using the following classes:

ObjectCache: The abstract base class that represents an object cache and provides the base methods and properties for accessing it. A cache entry itself is represented by the CacheItem class, which provides a logical representation of an entry and can include regions using the RegionName property.

MemoryCache: This class belongs to the same namespace and represents the type that implements an in-memory cache.

CacheItemPolicy: Represents a set of eviction and expiration details for a specific cache entry.

Code Work: Storing information in a cache. In this illustration I have kept some stock items in cache memory for further use, that is, to check the availability of a stock item in the future. The following code is for storing information in cache memory. When the application does not find any cached data in cache memory, it will redirect to the Get default stock function.
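A minimal, self-contained sketch of that lookup-then-populate flow is shown here. It is not the article's original listing: the `StockService` class, the `LoadDefaultStocks` helper, the `LoadCount` counter, the sample stock names, and the one-hour expiration are all assumptions made for illustration. It also assumes the project references `System.Runtime.Caching` (built into .NET Framework, available as a package on newer .NET).

```csharp
using System;
using System.Collections.Generic;
using System.Runtime.Caching;

// Hypothetical service that keeps the list of available stocks in the
// process-wide default MemoryCache.
public class StockService
{
    private const string CacheKey = "availableStocks";

    // Counts how often the default stock loader actually ran.
    public int LoadCount { get; private set; }

    public List<string> GetAvailableStocks()
    {
        ObjectCache cache = MemoryCache.Default;

        // Cache hit: return the stored list without reloading it.
        if (cache.Get(CacheKey) is List<string> cached)
        {
            return cached;
        }

        // Cache miss: load the defaults and keep them for one hour (assumed value).
        List<string> stocks = LoadDefaultStocks();
        var policy = new CacheItemPolicy
        {
            AbsoluteExpiration = DateTimeOffset.Now.AddHours(1.0)
        };
        cache.Set(CacheKey, stocks, policy);
        return stocks;
    }

    // Stand-in for the "Get default stock function"; the data is made up.
    private List<string> LoadDefaultStocks()
    {
        LoadCount++;
        return new List<string> { "Margherita", "Pepperoni" };
    }
}
```

Calling `GetAvailableStocks()` twice on the same instance runs the loader once; the second call is answered from the cache until the entry expires or the process ends.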
